
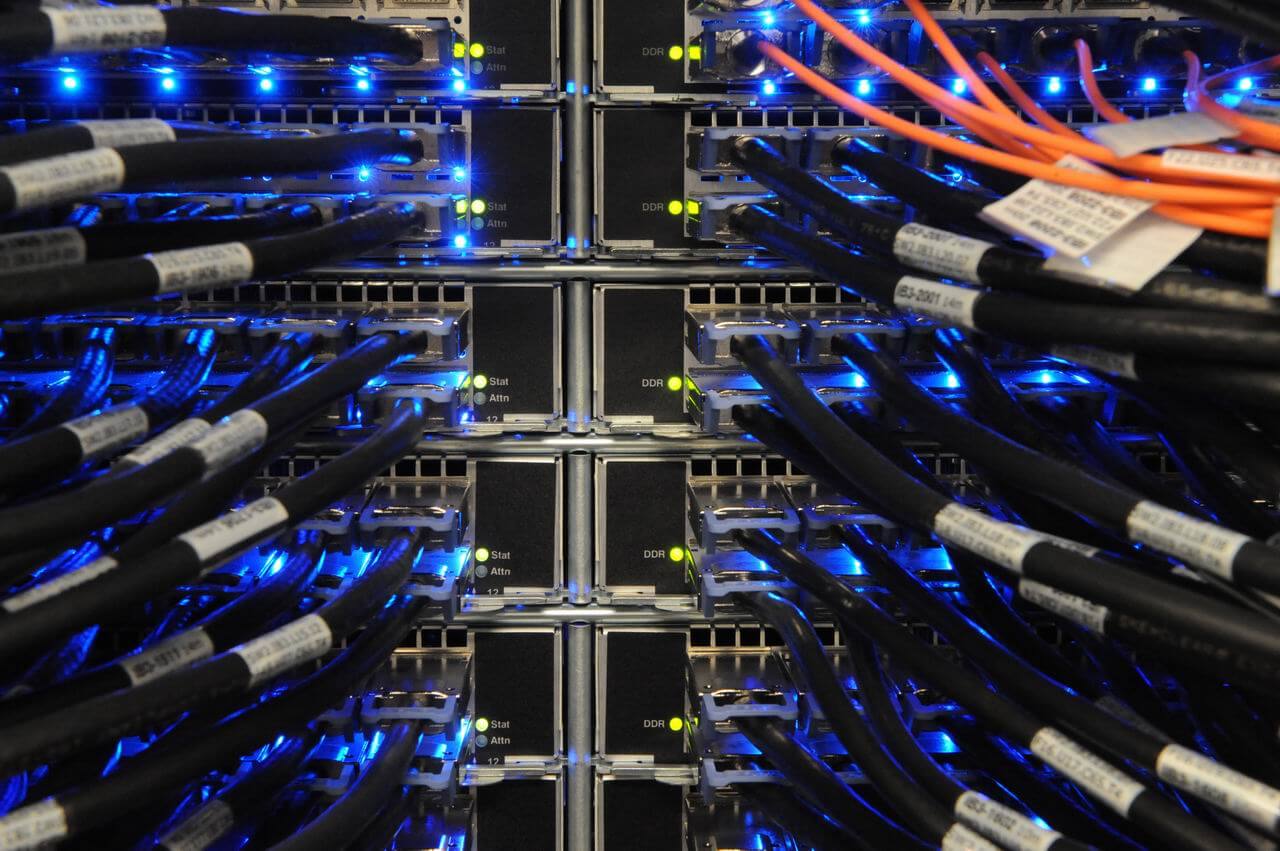

As our devices become more and more tied to the Internet and we gradually move away from the personal-computer paradigm, supercomputers seem less and less like cumbersome, outlandish machines with thousands of cooling units and orderly stacks of processors. But giant parallel systems, much like those early supercomputers, are still being developed.

Most of us know Moore's law, which in its popular form says that the power of computer chips doubles every 1.5 to 2 years. It applies not only to our computers and smartphones. All our electronic devices follow the same path of improvement: processing speed increases, and sensor sensitivity, memory, and even screen and camera pixel counts keep getting better. Chips can keep improving and shrinking to almost unimaginable limits, until quantum effects begin to interfere. Some experts believe that within the next 50 years Moore's law will hit that limit and the development of computer chips will slow down. Not surprisingly, the largest chip manufacturers are already looking for alternatives.
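To get a feel for what "doubling every 1.5 to 2 years" adds up to, here is a small back-of-the-envelope sketch. The function name and the ten-year horizon are our own choices for illustration; the only input taken from the text is the doubling period.

```python
def moores_law_factor(years: float, doubling_period_years: float) -> float:
    """Growth factor after `years` if capacity doubles every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


# Compare the two ends of the 1.5-2 year range over a decade (illustrative horizon).
for period in (1.5, 2.0):
    factor = moores_law_factor(10, period)
    print(f"doubling every {period} years -> about x{factor:.0f} after 10 years")
```

Even the slower end of the range gives a 32-fold increase in ten years, while the faster end gives roughly a hundredfold one, which is why a small difference in the doubling period matters so much over time.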

Our phones and tablets may keep surprising us for a long time by doubling in power every two years, but behind the scenes there are things that demand more: "clouds", supercomputers, the smallest computing systems. Let's try to guess which way the supercomputers of the future will go and how this will affect the overall computing power of our planet.

Exaflops and beyond

Miniaturizing chip components is only half the battle. The other half is that supercomputers need special architectures to scale up their power. In 2008, IBM Roadrunner broke the one-petaflops barrier: one quadrillion operations per second. (Flops stands for "floating-point operations per second", the standard measure for supercomputers used in scientific computing.)

In scientific notation, a petaflops is 10^15 operations per second. An exaflops computer, which various forecasts expect in 2019, will deliver 10^18, a thousand times more than the petaflops machines we see today. For comparison: the most powerful of the 500 fastest supercomputers in the world as of 2014, Tianhe-2, delivers less than 60 petaflops. By 2030, supercomputers should reach zettaflops, or 10^21 operations per second, and then yottaflops, or 10^24.
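The jump between these scales is easier to appreciate with a little arithmetic. The sketch below uses the standard SI prefix names (peta, exa, zetta, yotta) and a purely hypothetical workload of 10^21 floating-point operations; it also assumes a machine that sustains its full rated speed, which real systems do not.

```python
# Operations per second for each scale mentioned in the text (standard SI prefixes).
FLOPS = {
    "peta": 10**15,
    "exa": 10**18,
    "zetta": 10**21,
    "yotta": 10**24,
}


def speedup(faster: str, slower: str) -> int:
    """How many times more operations per second `faster` delivers than `slower`."""
    return FLOPS[faster] // FLOPS[slower]


def seconds_to_finish(operations: int, scale: str) -> float:
    """Idealized run time, assuming the machine sustains its full rated speed."""
    return operations / FLOPS[scale]


# Hypothetical workload, chosen only for illustration.
workload = 10**21
for scale in ("peta", "exa", "zetta"):
    print(f"{scale}flops machine: {seconds_to_finish(workload, scale):,.0f} s")
```

Under these assumptions, a job that keeps a petaflops machine busy for a million seconds (more than eleven days) takes under seventeen minutes at exaflops speed and one second at zettaflops speed.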

What do these numbers really mean? It is assumed that a complete simulation of the human brain will be possible by 2025, and that zettaflops computers will be able to predict the weather across the entire planet accurately two weeks ahead.

Green supercomputers

Power always has its price. If your computer's fan has ever broken down, or you have sat with a laptop on your lap, you know what that price is: computers heat up as they run. In fact, one of the main problems for supercomputer designers is finding a reasonable way to install and run powerful machines without breakdowns and without harming the planet. After all, one of the main purposes of weather modeling will be managing carbon dioxide emissions, so it would not be very wise to create new problems for climatologists in the course of solving the existing ones.

A computer works extremely badly when it overheats. Any computer system is only as useful as it is on its worst days, so cooling hot chips is of great interest to engineers. More than half of the energy used by supercomputers goes to cooling. And environmental concerns are already serious as computer performance keeps growing. Green solutions and energy efficiency have long been a cornerstone of every supercomputer project.

From "free air" cooling, in which engineers bring outside air into the system, to hardware designs that increase the system's surface area, scientists are trying to be as inventive as possible in improving the cooling efficiency of supercomputers. One of the most interesting ideas being implemented is cooling the system with a liquid that collects heat as it flows through pipes inside the computer itself. The projects behind the top 500 most powerful supercomputers take this very seriously.

Solving the problems of ecology and efficiency is not only good for our planet but also necessary for the machines to work at all. Perhaps this is not the most exciting item on our list, so let's move on.

Artificial brain

Let's talk about what should happen between 2025 and 2030, when supercomputers will be able to map the human brain. In 1996, a scientist from Syracuse University estimated that our brains hold 1 to 10 terabytes of memory, about 3 on average. Of course, it is worth noting that our brains do not work like computers. But within the next 20 years, computers should come to work as well as our brains do.

Just as supercomputers have proved extremely useful in mapping the human genome and in solving other medical problems, accurate models of the human brain will greatly help in diagnosis, treatment, and understanding the complexities of human thought and emotion. Combined with imaging technology, they will let doctors identify problem areas, model different forms of treatment, and even get to the root of many issues that have plagued us since the beginning of time. Implantable and injectable chips and other technologies will help monitor and even adjust levels of serotonin and other neurotransmitters to improve mood and general emotional state, while malfunctions of individual parts of the brain, caused by trauma for example, could be eliminated altogether.

Beyond the medical advances that supercomputers promise, there is also the question of artificial intelligence. Even mid-range computers can already handle some forms of artificial intelligence, of which clever systems for recommending books and television programs are only the simplest. Imagine an Internet doctor who can replace a real doctor, or even a whole panel of the best doctors in the world.

Weather systems and complex models

By 2030, it is expected, zettaflops supercomputers will be able to accurately simulate the Earth's entire weather system two weeks ahead. We are talking about a 100 percent accurate model of our entire planet and its ecosphere, with local and global forecasts available at the push of a button. Leaving aside the obviously useful things, such as predicting natural disasters, imagine that your vacation or wedding will never be spoiled by the weather, because you will know it a few weeks in advance.

The Earth's climate is such a complex system that it is often discussed in connection with chaos theory, the complexity of which some of us cannot even imagine. The question of whether the flap of a butterfly's wings in Brazil can set off a tornado in Texas will cease to be a puzzle. In short, it is hard to imagine a more complex system on a larger scale than our planet's weather.
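The butterfly question is really about sensitivity to initial conditions, and that can be seen even in a toy system. The sketch below uses the logistic map, a textbook example from chaos theory; it is our own illustration and has nothing to do with a real weather model. Two runs start from values that differ by only one part in ten billion.

```python
def logistic_trajectory(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two almost identical starting points (values chosen arbitrarily for illustration).
a = logistic_trajectory(0.2, 50)
b = logistic_trajectory(0.2 + 1e-10, 50)

# Absolute gap between the two runs at every step.
gaps = [abs(x - y) for x, y in zip(a, b)]
for step in (0, 10, 20, 30, 40, 50):
    print(f"step {step:2d}: gap = {gaps[step]:.3e}")
```

The gap roughly doubles with each step until the two runs have nothing in common. This is why long-range forecasts demand both enormous computing power and extremely precise measurements of the starting state.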

Food production and agriculture, the impact of weather on other large-scale scientific projects (polar expeditions or spacecraft launches), and the prediction of natural disasters are just a few of the benefits this computing power will bring us.

And of course, the weather is just the tip of the iceberg. If you can model weather conditions perfectly, you can just as readily recreate any other large, complex system.

Imitation of the world

Most of us are familiar with online games and can remember when life simulators like Second Life or The Sims were in vogue. Virtual reality has always been a favorite subject of science fiction. But if you multiply it by the capabilities of supercomputers, games and virtual environments can become more than just entertainment.

Although building such a "Matrix" would certainly be impressive, modeling our everyday cultural and social changes in order to monitor and forecast them could be extremely useful for our unstable society. Imagine how much simpler it would make urban planning, the development of new districts, and dealing with the uneven distribution of food and resources.

Supercomputers will not be reading coffee grounds: they will take in information from every possible source, from the latest trending tweets to the current load on the power grid, and build models that help manage not only present conditions but also future plans. Shortages of gas, electricity, and water, the planned use of these resources, and the supply of energy-hungry events such as the Olympics will stop worrying people.

With wireless Internet spreading across countries and the whole world, a high-quality model of our world will at some point become indistinguishable from the world in which we live. Only now are we starting to realize all these opportunities, which would not be available without supercomputers.

The article is based on materials .
