- Get link

- X

- Other Apps

- Get link

- X

- Other Apps

Last month, mankind lost an important battle with artificial intelligence - then AlphaGo defeated champion Ki Jae with a score of 3: 0. AlphaGo is a program with artificial intelligence developed by DeepMind, part of the parent company Google Alphabet. Last year, she beat another champion, Lee Sedola, with a score of 4: 1, but since then she has gained significantly on points.

Ki Jae described AlphaGo as the "god of the game of go."

Now AlphaGo finishes playing games, allowing players, as before, to fight among themselves. Artificial intelligence has acquired the status of a "player from the distant future", to the level of which people will have to grow for a very long time.

At the start, attention, go

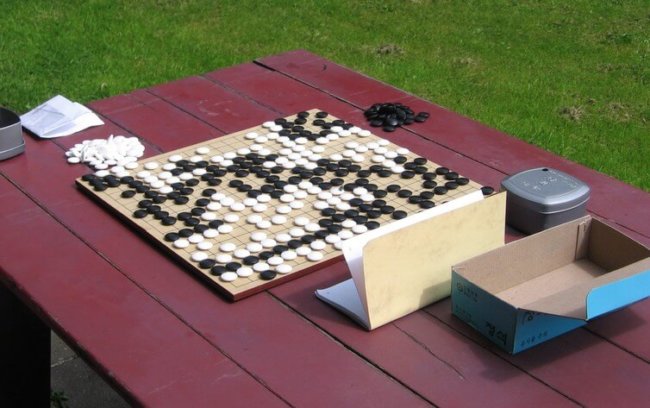

Go is an ancient game for two, where one plays white figures, the other is black. The task is to capture the domination on the board, divided into 19 horizontal and 19 vertical lines. Computers play go harder than chess, because the number of possible moves in each position is much greater. This makes the miscalculation of possible moves in advance - quite possible for computers in chess - very difficult to go.

The breakthrough of DeepMind was the development of a general learning algorithm, which, in principle, can be directed in a more socially oriented direction than go. DeepMind says that AlphaGo's research team is trying to solve complex problems, such as finding new treatments for diseases, drastically reducing energy consumption or developing new revolutionary materials.

"If the AI system proves that it is capable of acquiring new knowledge and strategies in these areas, the breakthroughs will be simply indescribable. I can not wait to see what happens next, "says one of the project scientists.

In the future, this is fraught with many exciting opportunities, but the problems have not yet disappeared.

Neuroscience and artificial intelligence

AlphaGo combines two powerful ideas on the topic of training, which have developed over the past few decades: in-depth training and reinforcement training. Remarkably, both directions have come out of the biological concept of work and learning the brain in the process of gaining experience.

In the human brain, sensory information is processed in a series of layers. For example, visual information is first transformed in the retina, then in the middle brain, and then passes through various areas of the cerebral cortex.

As a result, a hierarchy of representations appears, where simple and localized details first, followed by more complex and complex features.

Equivalent in AI is called deep training: deep, because it includes many layers of processing in simple neuron-like computing units.

But in order to survive in this world, animals need not only to recognize sensory information, but also to act in accordance with it. Generations of scientists and psychologists have studied how animals learn to take action in order to maximize the benefits to be gained and the reward they receive.

All this led to mathematical theories of training with reinforcement, which can now be implemented in AI systems. The most important of them is the so-called TD-training, which improves actions by maximizing the expectation of a future reward.

The best moves

Through a combination of in-depth training and reinforcement training in a series of artificial neural networks, AlphaGo first learned to play at the level of a professional player in go based on 30 million moves from games between people.

But then he started to play against himself, using the outcome of each game to inexorably sharpen his own decisions about the best move in each position on the board. The value system of the network has learned to predict the likely outcome taking into account any position, and the system of prudence of the network has learned to make the best decision in each specific situation.

Although AlphaGo could not test all possible positions on the board, neural networks learned key ideas about strategies that work well in any position. It is these countless hours of independent play that led to the improvement of AlphaGo over the past year.

Unfortunately, there is as yet no known way to find out from the network what these key ideas are. We can just study games and hope that something will be extracted from them. This is one of the problems of using neural algorithms: they do not explain their decisions.

We still do not understand much about how the biological brains are trained, and neurobiology continues to provide new sources of inspiration for AI. People can become experts in the game of go, guided by much less experience than what AlphaGo needs to achieve such a level, so there is still room for improvement of algorithms.

In addition, most of the power of AlphaGo is based on the technique of the back propagation method of the error, which helps it to correct errors. But the connection between it and learning in the real brain is not yet clear.

What's next?

Game Go has become a convenient development platform for optimizing these learning algorithms. But many real-world problems are much more disorderly and have fewer opportunities for self-learning (for example, self-governing cars).

Are there any problems to which we can apply the existing algorithms?

One example is the optimization of controlled industrial conditions. Here the task is often to perform a complex series of tasks, satisfy a variety of criteria and minimize costs.

Until the conditions can be accurately modeled, these algorithms will learn and gain experience faster and more efficiently than people. You can only repeat the words of DeepMind: I really want to see what will happen next.

The article is based on materials .

- Get link

- X

- Other Apps

Comments

Post a Comment